The basics every engineering team should know (but few actually follow)

If you have a website, web app, or mobile app, incidents aren’t a matter of if—they’re a matter of when. Outages, degraded performance, broken deploys, third-party failures… it’s all coming for you. The only question is: Will your team be ready when it happens?

Because it won’t always happen at 2 p.m. when your whole team is online and alert. Sometimes it’ll happen at 2 a.m., when one tired engineer gets paged, groggy and half-awake, trying to fix something critical before users even notice. And when that moment comes, your team needs a system that works under pressure.

I run the incident management program for my engineering org, and this post is a high-level guide to how we think about incidents. Whether you’re new to on-call or trying to make your process better, this will give you a solid starting point.

Let’s walk through the basics—top to bottom.

1. What Is an Incident?

An incident is any unplanned disruption to your service that impacts users or business goals. It doesn’t have to be a full-blown outage. It could be:

- Slow API responses

- Broken login on mobile

- Data syncing issues

- A deploy gone sideways

The goal isn’t to categorize everything perfectly—it’s to recognize when something is broken and treat it seriously.

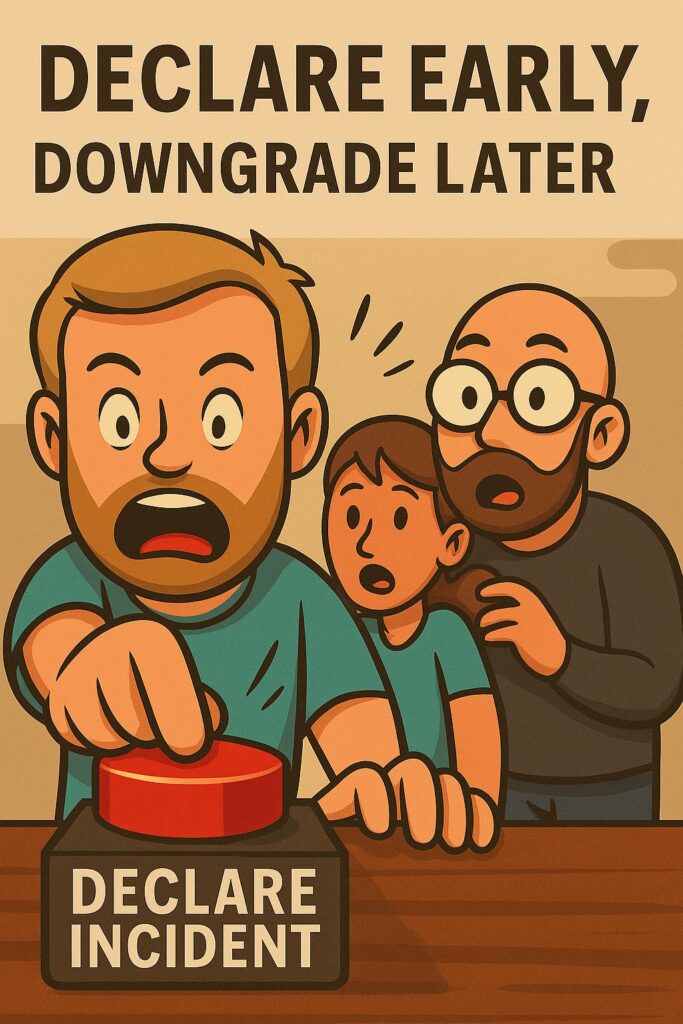

2. Declare It (Don’t Debate It)

One of the biggest problems teams face is hesitation.

“Is this really an incident?”

“Should we just wait and see?”

Here’s the rule: If something feels fishy—and it could impact customers—declare the incident.

You don’t need 100% certainty. You need a feeling deep down in your tum tum that something isn’t right. Declaring early gives you permission to focus, pull the right people into Slack, and start investigating fast. You can always downgrade it later.

The worst-case scenario? You called it early and everything’s fine. The best-case scenario? You caught a real issue before customers started screaming. That’s a win.

And honestly, few things feel better than thinking something serious is broken—only to realize it was a false alert, a flaky health check, or someone testing in the wrong environment. You relax. The team breathes. You stay ahead of the chaos. That’s the kind of false alarm you’ll gladly take.

🧨 Unpopular Opinion: Not Everything Deserves to Be an Incident

I’ve heard people say, “If an internal process is broken, it should be treated like an incident.”

Now—this totally depends on your org, but personally? I disagree.

Incidents, when run correctly, are incredibly efficient, but they are also very resource-intensive. You’re pulling engineers out of focus, dropping everything else on the floor, and coordinating in real time under pressure. That kind of response should be reserved for situations that truly require it.

If your CI pipeline is broken, or a reporting dashboard isn’t updating, or a dev tool is flaky, that sucks. But you need to ask: Is this worth activating the incident response system?

When you build a culture of fast, serious responses—where people jump in and treat incidents as top priority—you need to protect that urgency. If you overuse the word “incident” for non-critical things, you’ll burn out your team and water down the meaning.

Save incidents for when things are on fire—especially when customers are impacted. For everything else? Build clear escalation paths, document ownership, and treat it seriously—but don’t cry wolf.
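If it helps to see that distinction written down, here is a minimal Python sketch of the triage rule. It is a deliberate simplification: the `Signal`, `Response`, and `triage` names are made up for illustration, and a real org would likely weigh more than a single yes/no question.

```python
from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    INCIDENT = "declare an incident"
    ESCALATE = "escalate to the owning team"


@dataclass
class Signal:
    description: str
    # "Could be", not "confirmed": certainty is not required to declare.
    customers_could_be_impacted: bool


def triage(signal: Signal) -> Response:
    """Hypothetical rule: possible customer impact means incident."""
    if signal.customers_could_be_impacted:
        return Response.INCIDENT
    # Internal breakage still gets an owner and an escalation path,
    # just not the full incident response.
    return Response.ESCALATE


print(triage(Signal("Login failing on mobile", True)).value)
print(triage(Signal("CI pipeline is flaky", False)).value)
```

The point of keeping the rule this blunt is that nobody has to debate it at 2 a.m.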

⚡ Pro Tip: Declare Fast with Automation (Or You’re Wasting Time)

At my company, we use PagerDuty—and the Slack integration is a game-changer. When something feels off, we just run /pd trigger, assign the relevant team, and PagerDuty immediately notifies the on-call person. Boom. Incident declared. Everyone’s in the loop.

If you’re still manually pinging people, typing explanations, or copy-pasting into Slack—you’re wasting precious minutes. In the early moments of an incident, automation matters. The faster you declare, assign, and mobilize, the faster you solve it.

If you don’t have this kind of setup yet, now’s the time to fix that.

🧰 Tools to Automate Incident Declaration

- PagerDuty – Gold standard for incident response, on-call scheduling, and Slack/Teams integrations

- Opsgenie – Powerful alerting and incident management, works well with Atlassian tools

- FireHydrant – Built specifically for managing the full incident lifecycle, not just alerts

- Incident.io – Simple Slack-native incident coordination (great for fast-moving teams)

- xMatters – Workflow automation plus incident and alert handling for enterprise teams

Whatever tool you pick, make sure declaring an incident is as easy as typing a single command. Because in the moment, nobody should be fumbling with logins and spreadsheets.
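For a sense of what a one-command declaration can do under the hood, here is a rough Python sketch that sends a trigger event to PagerDuty’s Events API v2. The endpoint and field names come from that API. The function names, the example summary, and the routing key are placeholders, and the routing key would come from an Events API v2 integration on one of your own services.

```python
import json
import urllib.request

EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"
ALLOWED_SEVERITIES = {"critical", "error", "warning", "info"}


def build_trigger_event(routing_key: str, summary: str, source: str,
                        severity: str = "critical") -> dict:
    """Build the JSON body for a "trigger" event (illustrative helper)."""
    if severity not in ALLOWED_SEVERITIES:
        raise ValueError(f"unsupported severity: {severity}")
    return {
        "routing_key": routing_key,  # placeholder: your integration key
        "event_action": "trigger",
        "payload": {
            "summary": summary,
            "source": source,
            "severity": severity,
        },
    }


def declare_incident(routing_key: str, summary: str, source: str) -> int:
    """POST the event to PagerDuty and return the HTTP status code."""
    body = json.dumps(build_trigger_event(routing_key, summary, source))
    request = urllib.request.Request(
        EVENTS_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


# Building the event makes no network call, so it is safe to inspect.
event = build_trigger_event("YOUR_ROUTING_KEY", "Checkout errors spiking",
                            "checkout-api")
print(json.dumps(event, indent=2))
```

Wiring something like `declare_incident` to a chat command or a CLI alias is what turns declaring into a single step. The on-call paging itself is still handled by the tool.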

3. Assign Roles Immediately (And Don’t Be a Hero)

Don’t run an incident by committee. You need clear roles—fast:

- Incident Commander – runs point, coordinates updates, keeps everyone focused

- Communicator – posts updates to Slack, status page, execs, and customers

- Investigators – dig into logs, graphs, and systems to find and fix the root cause

On small teams, one person might wear multiple hats—and that’s okay as long as you call it out explicitly. But here’s the rule of thumb that too many teams break:

The Incident Commander should not be the person actively fixing the problem.

Why? Because I’ve been there. It’s 3 a.m., the system is down, alerts are firing, management is pinging you nonstop, and users might already be impacted. You’re deep in the weeds trying to trace the issue—and on top of that, you’re expected to coordinate the response, escalate to other teams, invite people to Slack, and send regular updates.

It doesn’t work. You end up doing both jobs halfway. Critical updates get missed. Stakeholders are left guessing. And nobody feels in control.

If your app is down, don’t try to be the hero. Either run the incident—or fix the issue. Not both. Let someone else lead the coordination while you stay heads-down on solving the problem.

That separation of focus is what makes incident response clean, fast, and repeatable.
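If you track roles in tooling, the rule of thumb is easy to check automatically. The sketch below is a hypothetical Python example, not a feature of any particular incident tool: the `IncidentRoles` class and its `problems` method are invented for illustration. It allows one person to be both Incident Commander and Communicator, which small teams often need, but flags a commander who is also listed as an investigator.

```python
from dataclasses import dataclass, field


@dataclass
class IncidentRoles:
    commander: str
    communicator: str
    investigators: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return human-readable warnings about the role assignment."""
        issues = []
        if self.commander in self.investigators:
            issues.append(
                f"{self.commander} is both Incident Commander and an investigator"
            )
        if not self.investigators:
            issues.append("no investigators assigned")
        return issues


roles = IncidentRoles(commander="ana", communicator="cy",
                      investigators=["ana", "ben"])
for issue in roles.problems():
    print("WARNING:", issue)
```

A warning like this is a nudge, not a gate. On a two-person team at 3 a.m. you may have no choice, but at least the overlap gets called out explicitly.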

4. Communicate Like a Pro

During an incident, silence kills trust. Keep updates flowing:

- Use a dedicated Slack or Teams channel

- Post regular internal updates (every 15–30 min)

- Update the status page if users are impacted

- Loop in stakeholders with clear, calm summaries

If you determine quickly that the incident is affecting customers, or even might be, update the status page immediately. You don’t need to wait for full context. Just say what you know: “Investigating performance degradation.” or “Some users may be experiencing errors.”

This lets users know it’s not just them, and buys trust while you work on the fix.

Take a cue from companies like GitLab or GCP—they post quick updates the moment something seems off. Even if it turns out to be a false alarm, users appreciate knowing you’re on it.

And don’t forget your customer support team. Loop them in early. If customers start reaching out, support will be on the front line, and they need clear, timely info to keep communication smooth. When support is aligned with engineering updates, you reduce noise, prevent confusion, and show customers that you know what you are doing.

Even if nothing has changed, say that:

“Still investigating. No ETA yet. We’ll update again in 15.”
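One way to make "no news" updates effortless is to template them. Here is a small Python sketch with a made-up `format_update` helper. The message layout and the 15-minute default are assumptions based on the cadence above, so adjust both to taste.

```python
from datetime import datetime, timedelta
from typing import Optional


def format_update(status: str, now: datetime,
                  next_update_minutes: int = 15,
                  eta: Optional[str] = None) -> str:
    """Format a short update that always commits to the next check-in."""
    next_update = now + timedelta(minutes=next_update_minutes)
    eta_text = f"ETA: {eta}." if eta else "No ETA yet."
    return (f"[{now:%H:%M}] {status}. {eta_text} "
            f"Next update by {next_update:%H:%M}.")


print(format_update("Still investigating", datetime(2024, 1, 1, 2, 0)))
# [02:00] Still investigating. No ETA yet. Next update by 02:15.
```

Stating the next update time in every message gives stakeholders a reason to stop pinging whoever is doing the fixing.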

5. Fix Fast, Then Learn

The goal is to bring user impact down and get systems stable, not to write perfect code or produce a full root cause analysis (RCA) in the moment. Once it’s resolved, schedule a postmortem quickly.

A good postmortem answers:

- What happened?

- What worked well?

- What slowed us down?

- What can we do to prevent this from happening again?

No blame. No finger-pointing. Just a clear path forward.

And here’s the key: this is your chance to buy a tiny bit of bandwidth to make your system better. Add an alert to catch it earlier next time. Wrap a fragile block in a try/catch to log the issue without taking down the whole app. Add a missing test to CI that would’ve stopped this before it shipped.

Mistakes happen. But they shouldn’t happen twice.
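To make the try/catch suggestion concrete, here is a minimal Python sketch (Python spells it `try`/`except`). Everything in it is hypothetical: `load_recommendations` stands in for any fragile, non-critical dependency, and `render_home_page` for the code path you want to keep alive.

```python
import logging

logger = logging.getLogger("app")


def load_recommendations(user_id: str) -> list[str]:
    # Hypothetical fragile dependency; it always fails in this sketch.
    raise TimeoutError("recommendation service timed out")


def render_home_page(user_id: str) -> dict:
    try:
        recommendations = load_recommendations(user_id)
    except Exception:
        # Log the failure with its stack trace, then degrade gracefully
        # instead of letting a non-critical feature take the page down.
        logger.exception("recommendations failed for user %s", user_id)
        recommendations = []
    return {"user_id": user_id, "recommendations": recommendations}


print(render_home_page("u1"))
```

This only makes sense for features the page can live without. Swallowing errors on a critical path would hide the very problem you want an alert for, so pair the log line with alerting on its frequency.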

6. Create a Culture That Improves Every Time

Incidents suck—but they’re also where your team grows the fastest.

They shine a spotlight on your systems, your communication, and your process gaps. Every incident is a chance to get better.

The best teams don’t avoid incidents—they learn from every single one and bake that learning into how they work.
